The 3 models of the digital transformation

The core element of digital transformation (or the big data equation) is the way we think about data. The transformation we have to achieve is also a transformation of our mindset and of the way we look at the data of our world.

In the novel “The World of Null-A”, A. E. van Vogt suggested that the words in our vocabulary influence the way we can think about reality. For example, our language has one word for snow, whereas the Inuit are often said to have 50 words to describe it, expressing the complexity and diversity of their environment.

If we look at the way we talk about data, we quickly realize that most of our expressions have long been associated with the concept of water. We describe the activity on our data (often associated with knowledge) with expressions tied to the liquid world: we filter data to remove dirt and keep the essence, spread knowledge, identify flows of information, collect data sources into a data lake, and so on.

Even the idea of the cloud emphasises the image of a dynamic state of water, where particles move freely and interact more easily with each other.

This model works well when we have to address data creation and data collection, because digitalization is, first of all, the ability to transform information from a non-digital form (images, sounds, letters, ...) into a numerical form that can be processed.

We easily understand that the data created has to be cleaned by appropriate filtering to reach a form that can be processed. This evolution started by converting reality into dedicated models that shape the data for a particular usage.

For example, the traffic management model sees you as a car moving from point A to point B that uses the road infrastructure and whose behavior can be influenced along the way by traffic lights and driving regulations. The social security model sees you as a married person, 40 years old, with 3 kids and of Hispanic origin.

Later on, this method of data creation reached its limit, as each of those models provided an independent view of reality (keeping only the attributes that the model allows) and the views were difficult to combine. The method has evolved toward a more open view of reality, where objects are classified in collections and each object can have a different number of attributes. All this data is collected in raw form for further use, as we consider that the value lies in the volume and in the ability to process the information.

This evolution is called Big Data and, more technically, is associated with the move from relational databases to non-relational storage (collections), because of the methods used to store the information.
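The difference between a model-specific view and a raw collection can be sketched in a few lines of Python. This is only an illustration: the records, the attribute names and the helpers `project` and `having` are hypothetical and do not come from any particular database product.

```python
from typing import Any

# Hypothetical raw collection: each object keeps whatever attributes were observed,
# so two objects do not need to share the same set of fields.
people: list[dict[str, Any]] = [
    {"id": 1, "age": 40, "marital_status": "married", "children": 3},
    {"id": 2, "vehicle": "car", "origin": "A", "destination": "B"},
    {"id": 3, "age": 27, "vehicle": "bike"},
]


def project(records: list[dict[str, Any]], fields: list[str]) -> list[dict[str, Any]]:
    """Model-specific view: keep only the attributes that a given model allows."""
    return [{f: r[f] for f in fields if f in r} for r in records]


def having(records: list[dict[str, Any]], *fields: str) -> list[dict[str, Any]]:
    """Query the raw collection: objects that carry all the given attributes."""
    return [r for r in records if all(f in r for f in fields)]


# The traffic model only sees vehicle-related attributes and drops everything else.
traffic_view = project(people, ["id", "vehicle", "origin", "destination"])

# The raw collection still allows combinations that no single model planned for.
riders_with_age = having(people, "age", "vehicle")
```

The projected view loses the age and family attributes for good, while the raw collection can still answer a question that crosses both models.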

More information on Why companies are jumping into data lakes

However, this evolution is still built on the image of water, which helps us focus on the important notions of data collection. The information has to be collected from the different activities that are its sources. This process has to be efficient to avoid losing information (like drops of knowledge) along the way, and it is constrained by the ability of the infrastructure to process the data flow. The information has to be cleaned by appropriate filters and combined in the company warehouse as a data lake, so that transformation models can process it easily.

This image reaches its limit when we need to think about how the data will be used. Because of the concept of water, we implicitly look at data as a limited resource that needs to be preserved (and sometimes hidden) from others.

Of course, we can think about the use of data by analogy with the life cycle of water and trace information through the history of its transformations. But even if we can identify some value in data conversion (like water into electricity), our brain is still stuck in a limited view of how data can be used.

The real potential of data lies in its ability to be transformed and combined to create new value, like a chemical reaction that creates a new structure by recombining atoms, a structure that has more properties than each atom alone. To embrace this idea, we need to associate data with another concept that is closer to it: wood.

We are used to having trees around us, which are the most natural form of wood. If we put aside the priceless value of trees in enabling life, we can restrict our view of a tree to a potential source of revenue, measured by the volume of wood it represents.

We can collect trees or set up processes that make them grow. We can sell them whole (limited benefit) or cut them and prepare them for basic uses, like bundles for a campfire. However, the revenue generated by this activity is limited compared to the effort needed to produce it.

We can increase the value by transforming the wood; each transformation can lead to a different type of revenue.

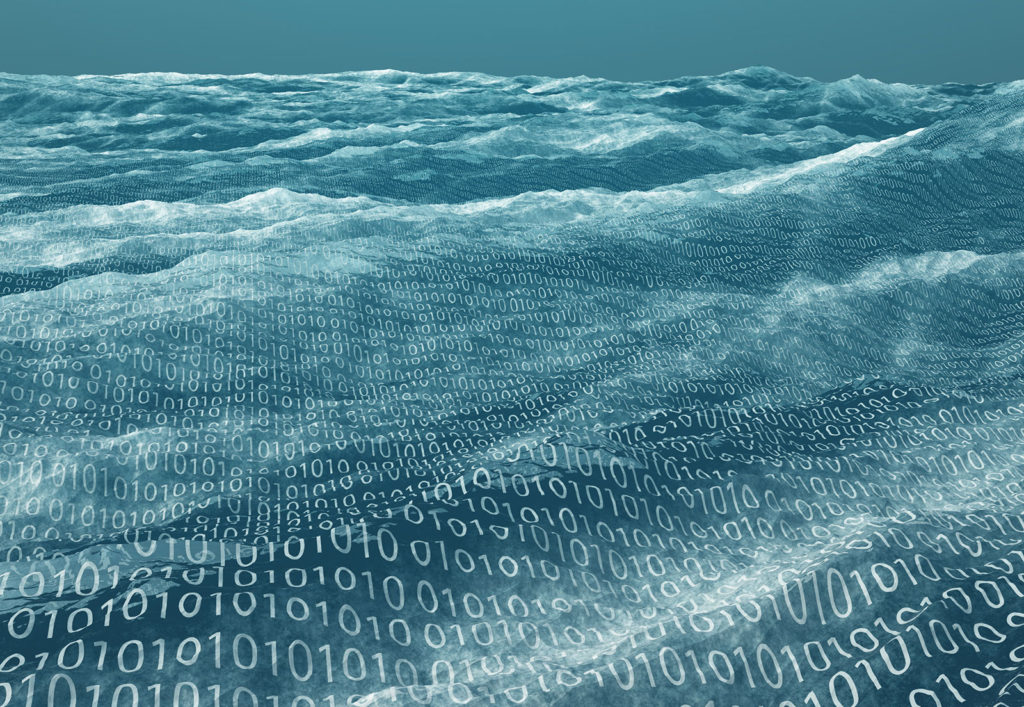

We can first carve and transform the data for art or visualization, which may or may not represent reality. In this example, the structure of the internet is represented either as a tree of connections between nodes or as the geographical location of data on a world projection.

More information on network model

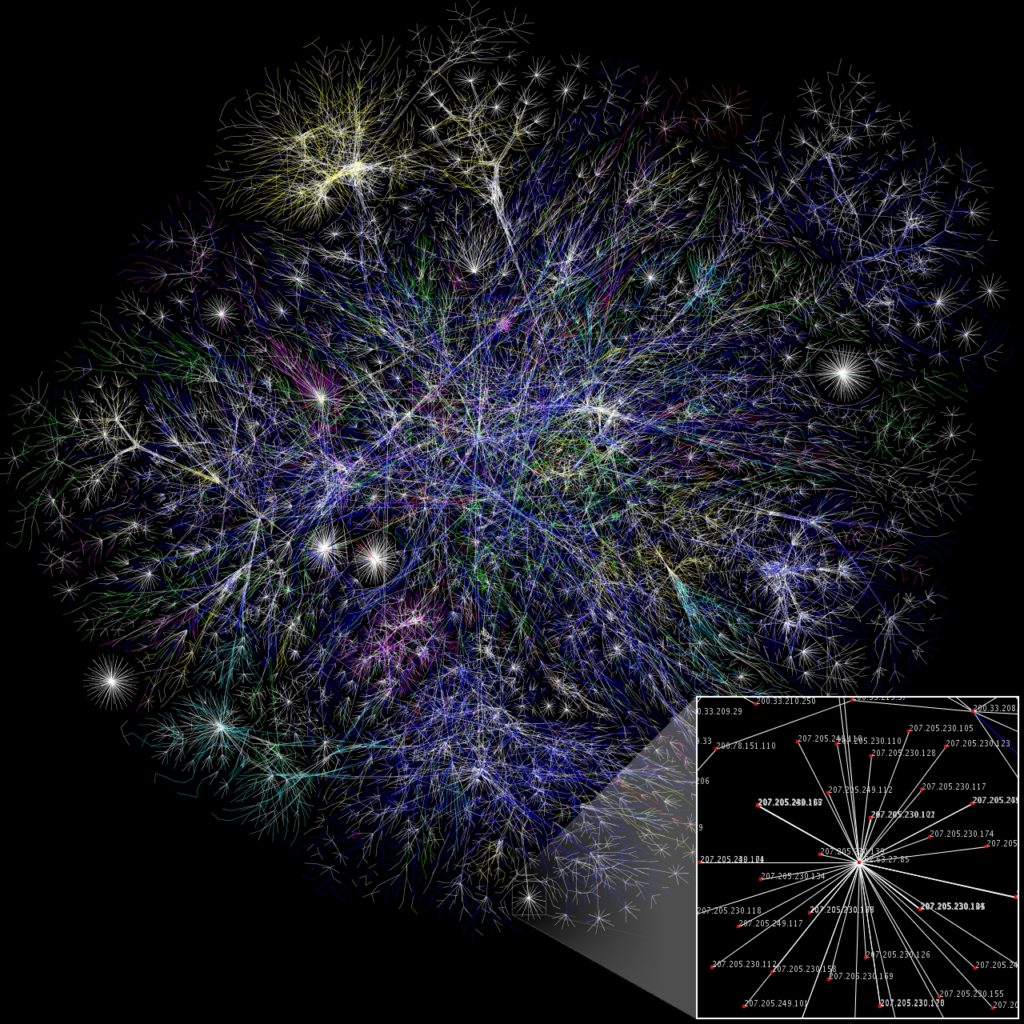

The information can also be combined to create knowledge, as in this simplified representation of medical scan images (computed tomography).

From a simple stream of opacity measurements made by a rotating instrument, a 2D representation of a slice of the body is created by aggregation and filtering.

Then, several horizontal slices of a patient are combined to generate a 3D model of the body by profile shaping and triangulation.

Finally, the result can be used to facilitate diagnosis or to prepare the patient's operation.

More information on optical topology

Data can also be transformed for direct decision making.

In this example, the 3D model of a building is combined with ray tracing to understand the shape of the shadow it casts and adjust the general architecture of a leisure center.

This application is often used to create a common ground between actors looking at different dimensions of a system.

More information on Fracture – collaborative design

Data can also be created as the foundation of a bigger usage.

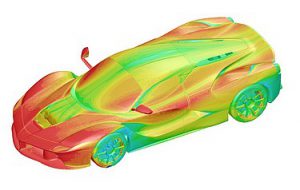

In this image, the measurements of wind friction are projected onto the 3D model of the car so that shape and material design can be adjusted to optimize the resistance created by the car in motion. Similar syntheses are used to identify vulnerabilities or stress on physical structures like wings.

More information on Ferrari profiling

Finally, data can be used to protect more valuable assets. In the domain of intrusion detection, we are evolving from the concept of gates (like firewalls), which rely on a small set of information that must be provided to pass through the filter, to broader models based on monitoring agents that instead detect anomalies in the data flow. Detecting an intrusion in a house while the owner is at work, using the phone's location to allow or block a credit card transaction, and detecting people moving in the wrong direction at customs are examples of protection by data transformation.
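As a rough illustration of anomaly detection on a data flow, the sketch below compares each new measurement with the few measurements that preceded it. The function name `detect_anomalies`, the window size, the threshold and the sample values are all assumptions chosen for the example; real monitoring agents use far richer models.

```python
from statistics import mean, stdev


def detect_anomalies(values: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return the indices whose value deviates from the mean of the preceding
    `window` values by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is treated as an anomaly.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical flow: requests per minute observed by a monitoring agent.
requests_per_minute = [20, 22, 19, 21, 20, 23, 21, 95, 22, 20]
suspicious = detect_anomalies(requests_per_minute)
```

Unlike a gate, nothing here checks a credential: the burst at index 7 is flagged only because it does not look like the recent history of the flow.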

So with this new model, we can support our incremental mental transformation and understand the value of transformation and the ability to create data from data. However, to fully embrace the change, we need one last model that will anchor another aspect of the value of data in our mind.

As an introduction, let us look at the recent news about Strava. Strava is an application that aims to “connect millions of runners and cyclists through the sports they love”. The application provides value to its users by tracking their performance and allowing them to compare it with that of other athletes.

More information on Strava release

When Strava released a new version last November offering its users a heat map of running activities, with the purpose of promoting its social network, several organisations unfortunately discovered that many military installations had been exposed by this data.

As many soldiers keep up healthy practices even on tactical missions (encouraged by official programs like the 2015 equipment of 20000 soldiers with Fitbits), detecting patterns of unusual activity on this heat map in desert areas (or around square structures) can be used to reveal military or secret bases.

This example highlights the fact that several values can be created from the same set of data.

The last model, which will allow us to understand the possibilities and dimensions of data, comes from our own biology: the neuron.

More information on neurosciences

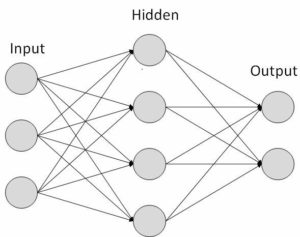

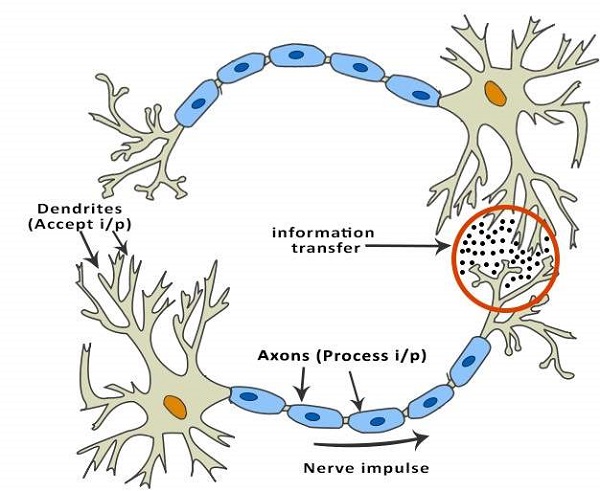

A neuron model is a processing approach based on the creation of value by incremental evaluation. As a simple overview, a neuron can be defined as a combination of three elements:

- a network of relations, each observing one single piece of information

- a process of evaluation that gives a weight to each relation, decides on a single result and compares the result to reality

- a process of adaptation that revises the network of relations based on how closely they matched reality
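These three elements can be sketched as a classic perceptron, which is one simple way to realize them and not necessarily the model the examples in this post rely on. The class `Neuron`, the function `train`, the learning rate and the sample data are assumptions made for the illustration.

```python
class Neuron:
    """Minimal perceptron: weighted relations, evaluation, adaptation."""

    def __init__(self, n_inputs: int, learning_rate: float = 0.1) -> None:
        # Network of relations: one weight per observed input.
        self.weights = [0.0] * n_inputs
        self.bias = 0.0
        self.learning_rate = learning_rate

    def evaluate(self, inputs: list[int]) -> int:
        """Weigh each relation and decide on a single result (0 or 1)."""
        total = self.bias + sum(w * x for w, x in zip(self.weights, inputs))
        return 1 if total > 0 else 0

    def adapt(self, inputs: list[int], observed: int) -> int:
        """Compare the decision with reality and revise the weights accordingly."""
        error = observed - self.evaluate(inputs)
        self.weights = [
            w + self.learning_rate * error * x for w, x in zip(self.weights, inputs)
        ]
        self.bias += self.learning_rate * error
        return error


def train(neuron: Neuron, samples: list[tuple[list[int], int]], max_epochs: int = 100):
    """Repeat evaluation and adaptation until a full pass produces no error.
    Returns the number of passes needed, or None if it never converged."""
    for epoch in range(1, max_epochs + 1):
        errors = sum(abs(neuron.adapt(x, y)) for x, y in samples)
        if errors == 0:
            return epoch
    return None


# Hypothetical reality to learn: raise an alert when at least one of two sensors fires.
samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
neuron = Neuron(n_inputs=2)
epochs_needed = train(neuron, samples)
```

Nothing about the rule is written into the neuron beforehand: the weights start at zero and the behavior emerges only from repeated comparison with the observed result.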

An example of a neuronal model can be found in weather prediction.

A neuron is associated with each pixel of a satellite image and is tasked with predicting the color of that pixel in the next satellite image. To do so, each neuron has a network of relations of two types: observations of the current color of several pixels around the reference point, and observations of the predictions of the other neurons around the reference point.

Based on this setting, the neuronal network provides an estimate of the next image with a confidence level.

More information on neural network for weather prediction

The neuronal model is a powerful approach that mimics our brain and moves us away from the belief that we can always build a predictable representation of reality through an initial exhaustive analysis.

In the neuronal model, value is created by starting from an initial state and progressing through iterations that compare the projected output with the observed one.

Often used in data science, the neuronal model has the benefit of increasing its value with time and of providing value as a grid rather than through the individual neuron.

More information on neural network

To grasp this value, we need to look back at the human neuron from two angles: prediction and speed.

In terms of predicting the evolution of a neuronal network, several laboratory experiments report the following measures:

- The behavior of a single neuron can be predicted at 100% from the chemical state of each of its inputs.

- A network of 2 neurons can only be predicted at 50%.

- A network of 3 neurons can only be predicted at 10%.

More information on information transfer

In terms of the decision speed and efficiency of a neuronal network, the literature, such as the work of Daniel Kahneman, Nobel laureate in economics, describes our brain as a combination of 2 systems:

- System 1 is an automatic, fast and often unconscious way of thinking that evaluates the environment and requires little energy or attention.

- System 2 is an effortful, slow and controlled way of thinking that requires energy and can’t work without attention.

Often simplified to the idea of the cost of focusing on a subject, the System 1/2 model highlights the fact that our behavior is driven by two complementary approaches: automatic evaluation based on patterns (fast, but subject to biases and systematic errors) and controlled evaluation based on reasoning (slow, and able to process only limited data).

Take the time to read this book Thinking, Fast and Slow

Back to value creation from data: this model draws our attention to the fact that there is also value created by observing the evolution of a data point and by designing a predictive model that isolates patterns of interest.

Of course, as highlighted by the author and by several similar studies, the value resides entirely in the ability to find the right patterns that observe reality, produce accurate predictions and improve over time.

In more technical terms, the core value of the neuronal model resides in accurate data sampling (the network), a good rational evaluation process and the ability to update the patterns from the result of that evaluation.

To do so, the common approach is to build a neuronal model, run it on a large set of data, and adjust it depending on whether or not it converges to a reliable prediction.

To give an example, scientists have been researching for years the ability to synthesize emotions from pictures. The applications are numerous, such as restoring vision to people blinded in an accident, robotics, or limiting internet traffic through the use of avatars.

For many years, the research results were limited by a model based on degrading the picture quality through frequency analysis. Significant progress (and speed) was observed by rerunning, on the same data, a model based on pattern detection that looks at similarity to standard expressions and body postures.

As a last application of the neuron model, we should look at the life cycle of data.

Faced with the constraints of data privacy (GDPR), the cost of data storage and other similar limitations, the image of water or wood makes us see data destruction as a necessary process to reduce our expenses, but one associated with a loss of knowledge or of decision-making capability. The neuronal model places the value in the pattern more than in the data.

To give a simple example, most of our sciences that have to process a large amount of data try to isolate patterns that make processing easier: history defines periods, anthropology defines civilizations, chemistry defines the periodic table, mathematics defines axioms and standard properties, and so on.

With this mindset, although the destruction of data limits our ability to train new neuronal models, the exponential trends of data creation and centralization allow us to focus on the data and the patterns that are our specific expertise, and to seek other data from the network.

With this approach, we limit the data scope of our system to a perimeter centered on the value creation of our specific domain.

In summary, to carry out a digital transformation, we need to use the combination of the 3 models to create several value propositions:

- Based on water for data collection and data synthesis

- Based on wood for data transformation and enhancement

- Based on the neuron for data correlation and pattern detection

Most importantly, we need to shift from a pre-canned approach to value creation to an incremental process that uses the value created to discover new value and adjust the solution delivered.

All free images come from www.pexels.com
